Load Balancers: Optimizing System Performance and Availability

Load Balancers: Optimizing System Performance and Availability

Introduction

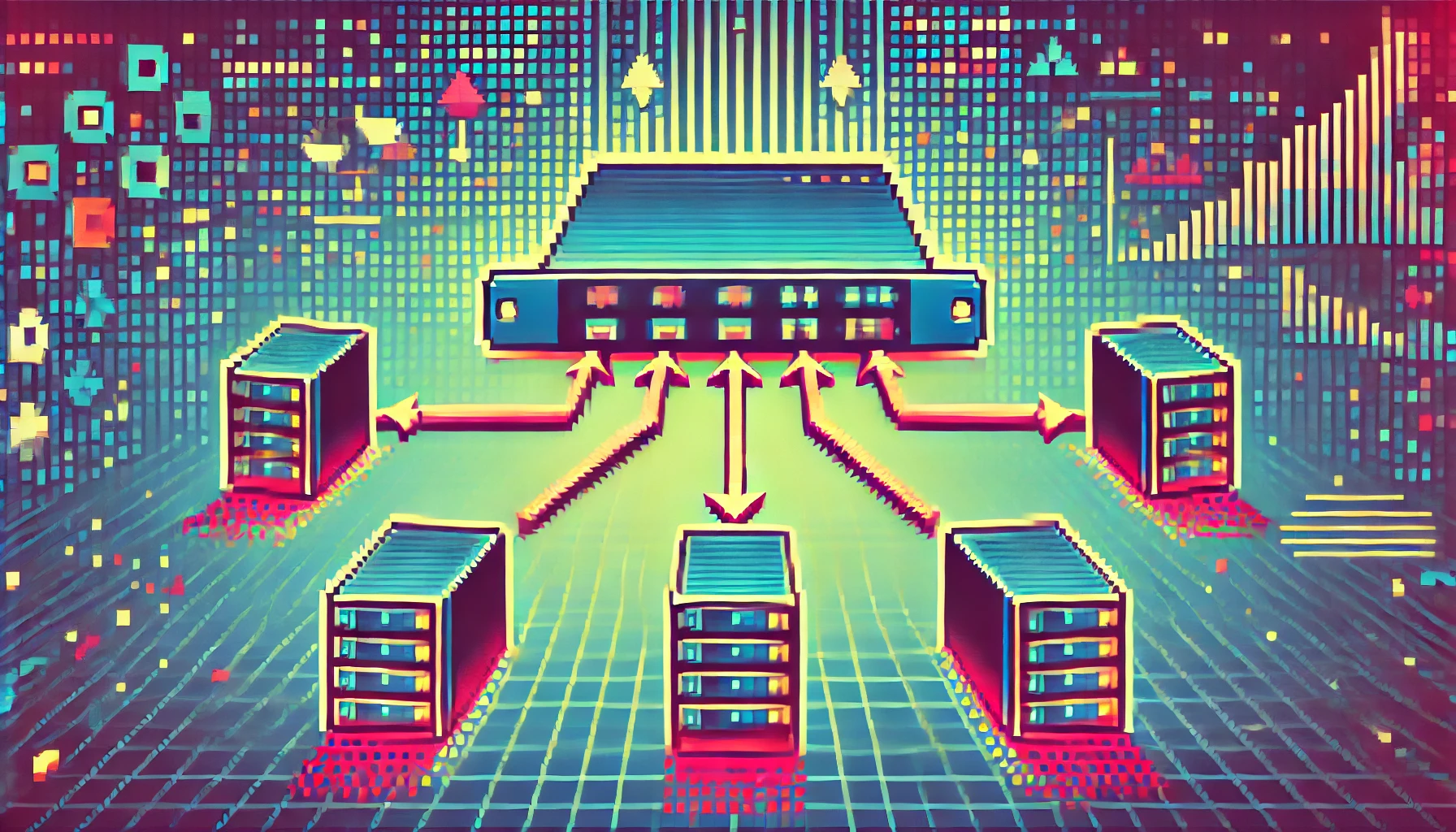

In today’s technology-driven world, where applications and websites must handle millions of simultaneous requests, load balancers have become crucial components of IT infrastructure. These devices or software efficiently distribute incoming traffic among multiple servers, enhancing the performance, availability, and reliability of applications.

What is a Load Balancer?

A load balancer is a device or software that acts as a “traffic director” for a network. Its primary function is to distribute incoming requests (such as website visits or API calls) among several servers capable of handling these requests.

Why are Load Balancers Important?

Performance Improvement: By distributing the load across multiple servers, it reduces the burden on each individual server, leading to faster response times.

High Availability: If one server fails, the load balancer redirects traffic to the remaining operational servers, ensuring continuous service.

Scalability: Load balancers make it easier to add or remove servers based on demand, facilitating horizontal scaling.

Flexibility: They allow for maintenance on individual servers without disrupting the overall service.

How Do Load Balancers Work?

Load balancers use various algorithms to determine how to distribute incoming requests. Some common methods include:

- Round Robin: Requests are distributed sequentially to each server in turn.

- Least Connections: New requests are sent to the server with the fewest active connections.

- IP Hash: The client’s IP address is used to determine which server receives the request, ensuring that a client always connects to the same server.

Types of Load Balancers

- Hardware Load Balancers: Physical devices optimized for handling high traffic volumes.

- Software Load Balancers: Applications that can be installed on standard servers or cloud instances.

- Layer 4 Load Balancers: Operate at the transport layer, making routing decisions based on IP addresses and TCP/UDP ports.

- Layer 7 Load Balancers: Operate at the application layer, capable of making more complex routing decisions based on the content of the request.

Examples of Load Balancing in Action

Example 1: E-commerce Website

Imagine an online store that experiences a surge in traffic during a holiday sale. Without a load balancer, all requests would go to a single server, potentially causing it to crash under the heavy load.

With a load balancer:

[Users] -> [Load Balancer] -> [Server 1]

-> [Server 2]

-> [Server 3]

The load balancer distributes incoming requests across multiple servers, ensuring that no single server becomes overwhelmed. This setup allows the e-commerce site to handle the traffic spike without performance degradation.

Example 2: Global Content Delivery

A popular news website serves content to users worldwide. By using DNS-based load balancing, the site can direct users to the geographically nearest server:

User in New York -> [Load Balancer] -> [North America Server]

User in London -> [Load Balancer] -> [Europe Server]

User in Tokyo -> [Load Balancer] -> [Asia Server]

This approach reduces latency and improves the user experience by serving content from the closest available server.

Implementing Load Balancing

Here’s a simple example of how you might set up a basic load balancer using NGINX:

| |

In this configuration, NGINX will distribute incoming requests across the three backend servers defined in the upstream block.

Conclusion

Load balancers play a vital role in modern web architecture, ensuring high availability, improved performance, and efficient resource utilization. By distributing traffic across multiple servers, they help create robust, scalable systems capable of handling high volumes of requests. As applications continue to grow in complexity and scale, the importance of effective load balancing will only increase.